In his talk at the Breakthroughs in Computing series at LabWeek22 in Lisbon, Milan Cvitkovic, founder and CEO of Integral Neurotechnologies, delved into the expansive and evolving field of neurotechnology. While the term brain-computer interface (BCI) might be more familiar to some, Cvitkovic explains that neurotechnology encompasses a broader spectrum. He argues that neurotechnology is one of the most crucial endeavors for humanity, albeit one without a single intuitive goal like climate technology or longevity research. The field's diverse potential applications can make it challenging to present a concise argument for its importance without resorting to high-level abstractions. To illustrate the field's potential, Cvitkovic enumerates various goals, emphasizing that neurotechnology is not just about controlling devices with our minds. Its applications span from healthcare advancements to the profound enhancement of human capabilities, and he advocates for its recognition as a civilization-level priority.

Interested in the latest research and developments in the field of neurotechnology? Join us at LabWeek Field Building, taking place June 10-16, 2024 at Edge Esmeralda, a pop-up event city in Healdsburg, CA. Co-curated by Foresight Institute, LabWeek Field Building will focus on deep dives and discussions on four main tracks (Neurotechnology, BCI, WBE; Human-AI Cooperation; Biotechnology and Longevity; and Extended Reality) and feature dozens of the leading minds and teams in these fields, including Cvitkovic. Go to labweek.io/24-fb to learn more and buy tickets.

# Overview of the Field of Neurotechnology [3:00]

As I begin to share my thoughts, I'll flag from the outset that I will use the term neurotechnology a little more than I use the term brain-computer interfaces, or BCI. They are nearly synonymous. Neurotechnology is a slightly more expansive term.

Neurotechnology is, in my opinion, tied for the most important thing that civilization needs to be working on right now. Unfortunately, from a PR and field-building perspective, neurotechnology doesn't have a single intuitive goal the way that climate technology or longevity research does. There are so many possibilities and possible use cases that it can be hard to make a succinct argument for neurotechnology's importance without being so high level that you risk a nosebleed.

I would liken the state of neurotech today to the state of the web in 1990. I think it was self-evident to the true believers who were building the web in 1990 that increasing communication and connectivity was important for society, but they couldn't necessarily point to a single killer use case that would justify the whole thing. Similarly, it's self-evident to me how much value there is in increasing the precision and effectiveness with which we can interact with the brain and with our own minds. But I think that vision is a little vague for many people, and I think that vagueness is unhelpful.

So, I won't be able to do it justice here, but I want to start with some brute-force examples that enumerate some of the goals of neurotechnology. It's important to know what the stakes are for success in this field, and I also worry that people's general conception of neurotechnology is often limited to the idea of controlling our phones with our brains or something (as great as that would be). In fact, that's a crushingly narrow vision compared with how people like me who work in the field think about it. So let’s kick off by defining some goals for this crucial space.

# Advancements in Healthcare and Beyond [5:19]

The first and most obvious point to mention is the potential for neurotechnology to cure disease and alleviate suffering. It's hard to even put into words how desperate the need is here. About 21 percent of the global disease burden is caused by neurological and neuropsychiatric disorders… that’s one in five Americans. And if you leave out malnutrition, treatable communicable disease and injuries, it's closer to 40 percent of the global disease burden as measured by disability-adjusted life years (DALYs), and that percentage has been steadily increasing. Concretely, we're talking about neurological disorders like Alzheimer's, ALS, epilepsy, Parkinson's, blindness and paralysis. On the neuropsychiatric side, we're talking about depression, post-traumatic stress disorder, addiction, schizophrenia; the list goes on and on. Two-thirds of people with a known mental illness don't seek treatment, and that's just the tip of the iceberg. The global burden of neurological and psychiatric disorders is the highest it's ever been and only getting worse.
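As a rough consistency check on the two percentages above, here is a minimal sketch of the arithmetic. It assumes (this is not stated in the talk) that none of the excluded categories are themselves counted as neurological or neuropsychiatric burden, so only the denominator changes. The variable names are illustrative.

```python
# Back-of-envelope check only. The 21% and 40% figures come from the talk;
# everything derived from them below rests on the assumption stated above.

neuro_share_of_total = 0.21         # neuro share of all DALYs
neuro_share_after_exclusion = 0.40  # neuro share once some categories are left out

# If the neuro DALYs stay the same and only the denominator shrinks:
#   neuro / total = 0.21   and   neuro / (total - excluded) = 0.40
# then (total - excluded) / total = 0.21 / 0.40.
remaining_fraction = neuro_share_of_total / neuro_share_after_exclusion
excluded_fraction = 1.0 - remaining_fraction

print(f"Share of total DALYs that remains: {remaining_fraction:.1%}")
print(f"Implied share that was left out:   {excluded_fraction:.1%}")
```

Under that assumption, the two figures together would imply that malnutrition, treatable communicable disease and injuries make up a little under half of all DALYs.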

The point is that even if you're inclined to roll your eyes at the more futuristic stuff that comes later on in this talk, the therapeutic potential of neurotechnology alone justifies it being a civilization-level priority.

Over the past 20 years, the funding of mental health research in the US has been flat. So, despite the fact that the burden of mental health disorders is increasing, the funding for research to address that burden has remained the same. And the burden of disease is expected to continue to increase. I don't know how much clearer I can make it that there's a huge unmet need. But there's no starker example of the sorry state of the art than depression, which is one of the most common and severe mental health disorders.

Longevity fans: the brain is ultimately the thing you care about not dying. I would contend that it is likely to require special care and attention, inasmuch as it's more sensitive to treatment, at least if you want to be sure that the treatment is preserving whoever you are.

Now at some point, it has occurred to most people that what counts as a disease or disability is a little bit relative. Fatigue, for example: the vast majority of Americans and, I suspect, people in the world use a nanoscale neurotechnology called caffeine every day to regulate their energy levels. It's so ubiquitous that fatigue in the morning is something we excuse as being before we've had our coffee, rather than just the acceptable state of biology. You could say similar things about pain before painkillers. So the natural question is: Why leave the status quo where it is? Is what we consider a good life or even a normal life today going to be considered torture by the standards of a civilization with adequate neurotechnology? Imagine how many people would benefit just from perfect control over their energy level. Imagine a degree far beyond what we can achieve with coffee or other drugs. Improvement in that one dimension of our conscious experience would unlock massive amounts of well-being.

Or consider sleep: how much well-being would be unlocked by a neurotechnology that gave us perfect control over it? Imagine perfect-quality sleep every time, or just being able to fall asleep whenever you wanted to, or being able to lie down for a nap and set your brain to wake up in exactly one hour.

A dream neurotechnology for me, personally, would be neurotechnological earplugs when I get onto an airplane. I want to be able to turn on perfect, reversible deafness. Of course, we can imagine much more control over our sensory inputs than that from enhanced vision and enhanced hearing, all the way to Matrix-level virtual reality.

Let’s take it a step further. What if you could just set a timer on your phone to block any distracting thoughts for 30 minutes? Imagine enhancing working memory. What would it be like to be introduced to 15 people and effortlessly keep all their names in your head at once? What if you could literally commit something to memory in the GitHub sense of commit? Cue accelerated learning. I'm sure we would all love that.

# Seamless Communication Between a Human Brain and a Computer [9:08]

The thing that people usually think of when they hear the term “brain-computer interface” is seamless communication with computers, devices, tools and digital content, but also, in the future, hopefully seamless communication between people. And who knows? Maybe even someday with animals. A particular category of neurotechnology that's deeply important to me is giving ourselves the ability to behave like, and hopefully become, the sorts of people that we already wish we were on reflection: people with better impulse control, people who are more open-minded, people who are more honest and braver with each other and with ourselves.

To be clear, this is not some feel-good desire on my part. I would contend that a vast proportion of the problems we face individually and as a species come down to failures to behave in the way that we already want to. A common factor in most (arguably all) existential risks to humanity is humans behaving in regrettable ways, failing to coordinate, or failing to look past their narrow self-interest, even in ways that they themselves agree are bad behavior. It is somewhat incredible the degree to which our instincts are not suited to the world we live in now. Of course, our lesser instincts had an evolutionary advantage, or at least permissibility, and we should be as careful about meddling with them as we should be with any new technology. But all this is to say that my vote for the most underrated use cases of neurotechnology goes to things like control over mood and affect.

# Controlling Mood and Behavior [10:43]

We all recognize in cases like depression and bipolar disorder that people's moods are not a well-calibrated function of their external circumstances. But outside these pathologized cases, how often is the state of our minds really matched that well with what we're doing in our lives?

And what does that cost us? We don't usually think to tally it up because it's just the way things are. Why is it that when you go to lunch with your boring uncle, you can't just dial up patience and kindness to the level that you're going to wish they had been at as soon as you walk out of your boring uncle's house? I think there are absolutely ways to do this badly. We don't want people putting themselves into a stupor or running away from their problems. But these worries are evidence of an extremely impoverished vision of what we could achieve with adequate neurotechnology.

Another underrated use case, I think, is improving rationality, for example through the ability to flag or eliminate cognitive biases. Imagine thinking through whether to take a job offer and getting a ping from your phone that says, "Hey, it looks like your brain activity shows that you're suffering from status quo bias. Here's a note that you wrote to yourself last time you did this." Imagine being able to install or uninstall motivations, habits, predilections, aversions. You can imagine hypnosis, but a thousand times more effective. Or even being able to generate or guarantee actual empathy by literally experiencing what someone else has experienced or will experience. There are actually some pretty crazy proofs of concept of this that have been done with VR, but this could go so much further.

For a crypto audience, imagine if "I feel your pain" was a literally enforceable promise that you could make in a smart contract and not just something that people say.

And lastly, and probably most importantly, let's talk about AI. Artificial intelligence seems extremely likely to play a transformative role in society in the near future. And I don't have to convince you, I don't think, that this transformation is not guaranteed to go well. But I would like to suggest something that I don't hear much in the AI discourse, which is that neurotechnology, while not a silver bullet by any means, is an important part of a broader portfolio of AI safety and alignment efforts. The high-level intuition for this is simply that human values come from here, no matter your thoughts on moral realism or metaethics in general. This one-and-a-half kilogram cluster of cells in our skulls is empirically the ultimate arbiter of morality. And if you think that's wrong, consider whether that one-and-a-half kilogram cluster of cells in your skull is playing a role in you forming that opinion. Now, we have introspection and language to get access to some of what's going on in here, and those are very useful. But a few thousand years' worth of attempts at using introspection and language to operationalize the moral judgments of this one-and-a-half kilogram cluster of cells hasn't yet produced anything that seems particularly suitable for aligning AI. So considering other approaches seems prudent.

# Exploring Use Cases of Neurotechnology [13:53]

One potentially pivotal use case of neurotechnology regarding AI is obtaining greater quantity and quality of data on human values than we can get from language or other conscious expressions of morality, like voting. AI progress in the recent past has tended to be driven by large datasets. Human values are the things we want an AI to learn. Providing it with more, higher quality data on human values might be wise. What are these better data? One could imagine a neuroimaging implant that passively observes the brain producing moral intuitions or making moral judgments, especially if that was combined with real-world contextual data. It's one thing to verbally express a moral judgment about a situation, especially a hypothetical situation. It's another to watch it happen in real time, in context. And, of course, observing the brain's computations during these complex conscious moral deliberations gives more resolution on that process than can be expressed even if someone's just thinking aloud.

Another interesting use case might be direct measurement of subjective well-being in the brain, although first we'd have to disentangle what subjective well-being is at the neurological level in order to measure it. Of course, you'd have to avoid wireheading in such a case; that becomes extremely important, but it is potentially possible. Another potentially valuable use case for neurotechnology related to AI is figuring out which aspects, if any, of the brain we would like an AI to emulate. The human brain may be the closest thing we have to an optimizer that is aligned with human values, and so emulating aspects of its operation might be very useful for designing safe AI.

In the limit of understanding everything about the brain and being able to simulate it, of course, you'd have whole-brain emulation (WBE), which would be aligned with at least one human's values by definition: those of whoever is getting uploaded. But less-than-perfect, more tractable kinds of mimicry might still yield more aligned systems on shorter time scales. Or, potentially, AI built to emulate the operation of the brain might be easier to test for alignment, especially if you have a functioning human brain to compare it to. This would be kind of a partial-emulation approach. This is obviously a big unknown and carries some risk of good ideas from neural computation accelerating AI progress, but I think it's well worth exploring.

I should make explicit the connection to neurotechnology here, rather than just neuroscience. It is that neuroscience is bottlenecked mainly by tools, I would argue. So better neuroscience to learn these things would require better neurotechnology.

And then finally, there's this notion of merging humans with AI, which, for example, Neuralink has cited as one of its long-term goals. It's not entirely clear how an AI-human hybrid would be structured, and it's generally thought that such hybrids would not be competitive with pure AI systems in the long run, but they may be useful during the early stages of AI development, especially in takeoff scenarios that are sensitive to initial conditions.

That's a lot to think about. Again, none of the preceding is guaranteed to make transformative AI go well, and none of these things is likely to be relevant on a sub-five-year time scale. I do not think that we should be diminishing our efforts on large language model safety or interpretability or governance right now. However, now also does not seem like a great time for civilization to be prematurely limiting the approaches toward AI safety that it's exploring. And as I've hopefully made some kind of case for here, neurotechnological approaches seem like a very worthwhile addition to civilization's portfolio of alignment approaches.

# A Look at Governance and Use Cases [17:26]

Lastly, on just this goals section: like any new technology, there are absolutely uses that I don't personally advocate for. I think perfect lie detectors in the hands of authoritarian governments would be one. Others include addictive technologies that don't come bundled with the tools to address addiction, accidental corruption or reinforcement of bad values, and giving AI systems additional attack surface on human minds. These are all outcomes I would not like to see. And I'll just say that I think good governance of potent future neurotechnologies is an important part of avoiding these outcomes and is something that I hope the brilliant minds at places like Protocol Labs can help find solutions for, in addition to society at large working on these problems.

OK, let's come down from the razzle-dazzle a little bit. Now that I've hopefully painted something of a picture of the value of neurotechnology, let's look at the state of the field today. And I'll just start by recapping some neurotechnologies that are widely used either in medicine or in society, in general.

First, it's only fair to start by mentioning the OG and still most widely used type of neurotechnology, which is small-molecule drugs. We're all familiar with drugs; you probably take them every day in some form. Drugs have a pretty good UX for neurotechnology. Once they're in you, they usually last all day, and you don't have to wear or charge anything. But they're hard to turn off once you've taken them, and despite their profound and diverse effects, they aren't particularly flexible or programmable. So while their historical importance should not be understated, and they're very useful as demonstrations of the manipulability of consciousness, drugs aren't going to get us to BCI on their own.

Also, just a shout-out to cognitive techniques, like meditation, hypnosis, brain-training software. I don't count these as neurotechnologies per se, but like drugs, they're used by millions, if not billions, of people every day, and the effects they have are instructive, I think, for neurotechnology development.

The category of neurotechnologies that people probably most associate with BCI is methods that interact with the nervous system via electrical signals. EEG, or electroencephalography, is one of the most common methods you might have heard of. It's nearly 100 years old. It's probably what's in most consumer products out there that brand themselves as neurotechnologies. There are different flavors of EEG. One example is non-invasive EEG, like what you might buy in a consumer headset. Another is invasive electrocorticography on the surface of the brain, which is used in epilepsy treatment, for example, to localize seizures. These methods work by measuring voltage changes produced by the activity of large groups of neurons near the electrodes. And of course, electricity can be used to stimulate neurons as well as to record from them.

Electrodes can be made small enough to record from and stimulate individual neurons or small groups of neurons. This has been used for cochlear implants since the 1970s. Tens of thousands of these cochlear implants are implanted every year. A similar idea is used in retinal implants, although they've had slower adoption. The idea is that you have some kind of external camera or microphone, and that transmits signals to an array of electrodes. In the case of a retinal implant, that array interfaces with the retina. On the right here is deep-brain stimulation. Most people haven't heard of it, but it's the most commonly performed surgical procedure for Parkinson's and a number of other conditions. It's used if drugs don't work or stop working. Around 150,000 deep-brain stimulation devices are implanted every year. So, it's also a very useful case study for emerging neurotechnologies.

Another widely used technology I'm surprised people don't know more about is transcranial magnetic stimulation, or TMS. It's a non-invasive method; it works by electromagnetic induction. It's FDA-approved for depression, anxiety, OCD, and smoking cessation. Statistics are a little tricky, but it seems like 50,000-plus people a year use it. It's not super precise in terms of where it targets, but lots of improvements are being made. Recently, a new protocol called the SAINT protocol was developed that, in its first trial, had very high remission rates for depression, and a startup is now commercializing it.

Another technology to mention is MEG, or magnetoencephalography, which measures magnetic fields produced by the currents in the brain. It's not super widely used today because the hardware and shielding required are pretty hefty. But the underlying physics are advantageous compared to EEG because magnetic fields are less distorted by the skull and the scalp. So in principle, you could read a lot of information from the brain with an advanced MEG system.

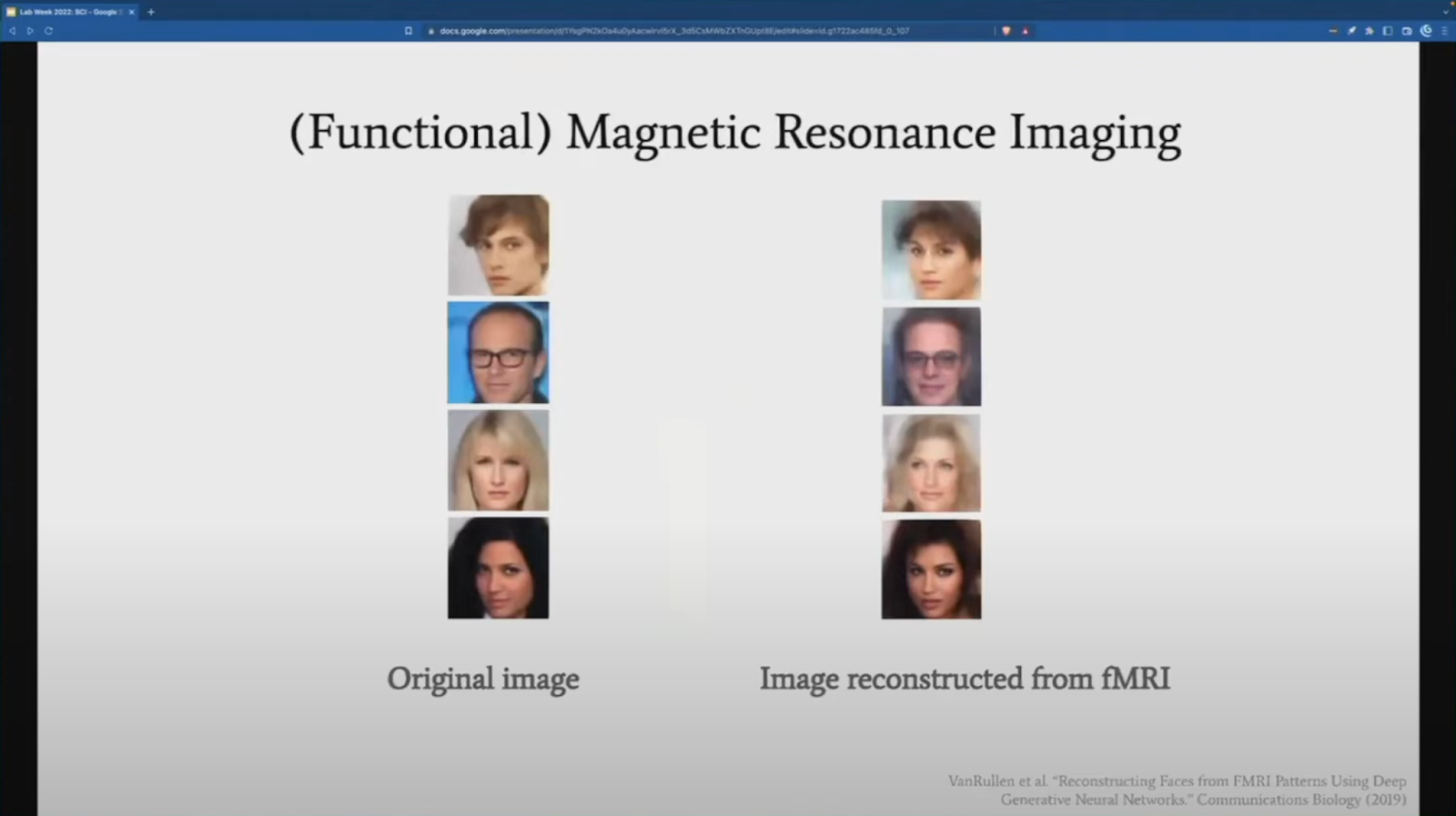

And then we have fMRI, which I'm sure people are familiar with: functional MRI, meaning MRI used to detect neural activity. The changes in blood oxygen that fMRI picks up are slower than the electrical signals that move around the brain. They tend to be on the order of half a second to a second. So you're not going to want to control your phone using fMRI anytime soon. But fMRI is non-invasive, and it can image the whole brain, which are great features. And even with the slow time scales, you might be surprised what you can accomplish with these signals.

Here is an example where researchers trained a deep generative model on fMRI activity. Using that model, they were able to show a person an image of a face like the ones on the left, record their brain activity with fMRI, and then reconstruct the image from only the fMRI data.

# A Look at Frontier Tech in the Next Five Years [22:56]

OK, this is all stuff you've probably heard of. What does the frontier look like now? By frontier, I mean technologies that are already being used in humans, which is a critical milestone in any neurotechnology's life.

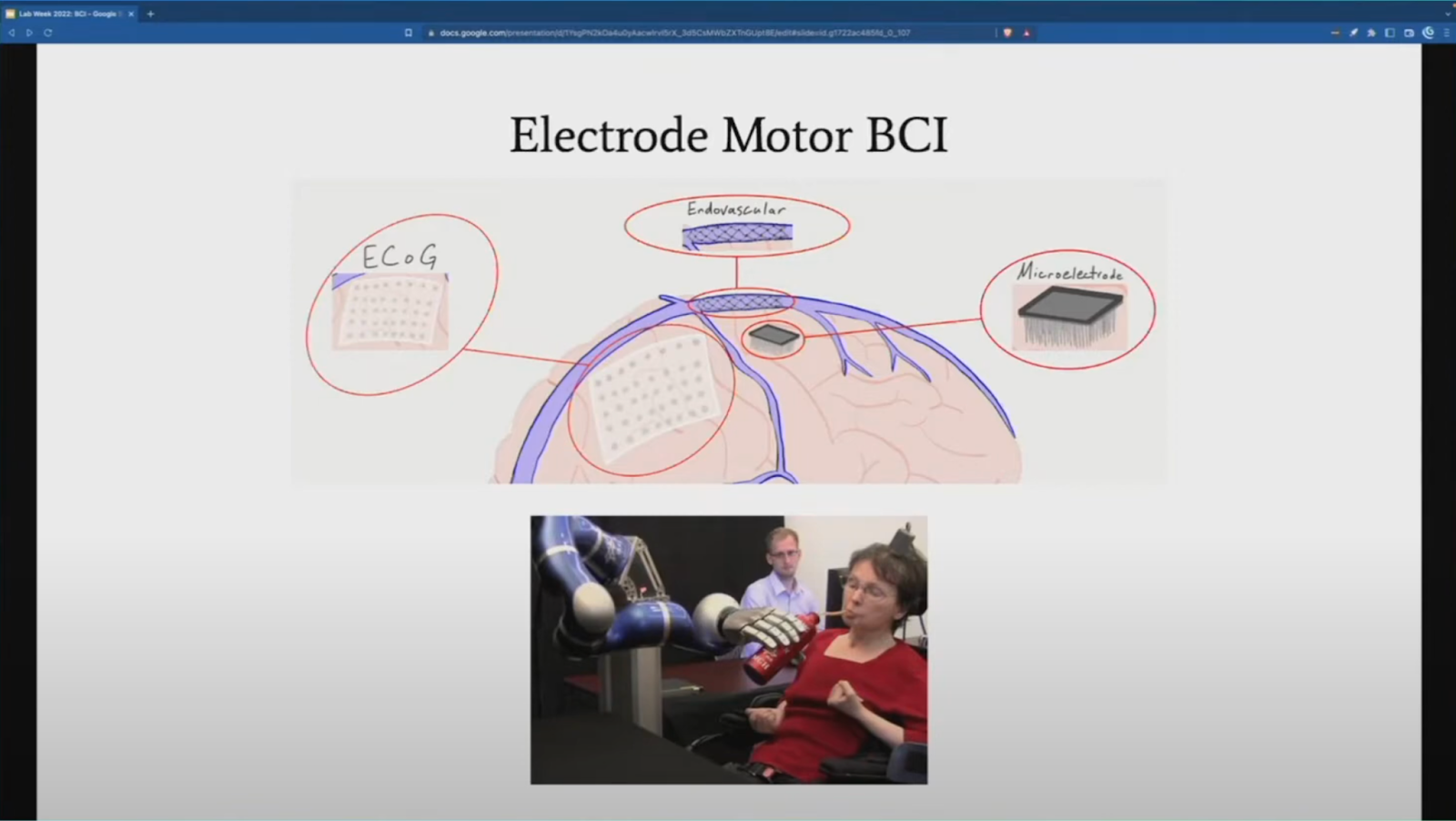

The next-generation technology most people are familiar with is the electrode-based motor brain-computer interface. The basic idea here is to put a lot of small electrodes very near the neurons responsible for motor function in the cortex, the outer part of the brain, and determine a user's movement intentions straight from the activity of those neurons.
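To make the idea of reading movement intention from neural activity a little more concrete, here is a toy sketch of one classic textbook approach, population-vector decoding. It is not the method used by any particular device mentioned in this talk. It assumes simulated neurons whose firing rates follow cosine tuning around a preferred direction; all function names, parameters and numbers are illustrative.

```python
import math
import random

def preferred_directions(n_neurons):
    """Evenly spaced preferred movement directions (radians), one per simulated neuron."""
    return [2 * math.pi * i / n_neurons for i in range(n_neurons)]

def firing_rates(intended_angle, prefs, baseline=10.0, gain=8.0, noise_sd=0.0, rng=None):
    """Cosine tuning: a neuron fires most when the intended movement matches its preferred direction."""
    rng = rng or random.Random(0)
    return [
        baseline + gain * math.cos(intended_angle - p) + rng.gauss(0.0, noise_sd)
        for p in prefs
    ]

def decode_direction(rates, prefs, baseline=10.0):
    """Population vector: sum each preferred direction weighted by rate above baseline."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, prefs))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, prefs))
    return math.atan2(y, x)

# Simulate 64 noisy neurons while the user "intends" a 30-degree movement.
prefs = preferred_directions(64)
intended = math.radians(30)
rates = firing_rates(intended, prefs, noise_sd=1.0, rng=random.Random(42))
decoded = decode_direction(rates, prefs)
print(f"intended {math.degrees(intended):.1f} deg, decoded {math.degrees(decoded):.1f} deg")
```

With evenly spaced preferred directions and no noise, the decoded angle matches the intended one exactly; with noise, the error shrinks as more neurons are recorded, which is one intuition for why electrode count matters.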

There are a variety of different form factors that this can take. ECoG is electrocorticography; that's what we saw on the slide before. The most widely studied systems for motor BCIs are microelectrode arrays, which are implanted in the patient. There have been over 30,000 patient days of inpatient research with these microelectrode arrays. So there's really no question at this point that the principle works. It's a matter of improving the devices themselves in a variety of ways: making them smaller, having fewer adverse effects when implanted, and of course getting them approved by regulators.

Another approach you can take to move things forward is to place electrodes in the blood vessels near the neurons of interest and not directly in the brain, which you can see in the middle here; that's the endovascular approach. The advantage of approaches like this is that you don't have to drill holes in the skull. You can just go in through a vein somewhere in your extremities and drop these electrodes off near the neurons they need to listen to.

Synchron is the company that's pushed this method the furthest. They have implanted, I think, five patients so far, all severely paralyzed. Four of them have had the implant for a little over a year and have reportedly used it to send text messages, manage their finances and things like that. All four of those long-term patients are in Australia, where it's a little easier to do human research of this kind.

It's also worth mentioning that you can make a motor BCI that doesn't involve the brain. On the left is a prosthetic arm that reads signals from the nerves in the upper arm. On the right is a system from Meta (formerly Facebook), which acquired a company called CTRL-labs that makes this hands-free gesture detector for use with VR systems, so you don’t have to hold anything while you’re using VR. It's based on electrical signals from the muscles in the forearm, so it's not exactly a BCI, but it's pretty close.

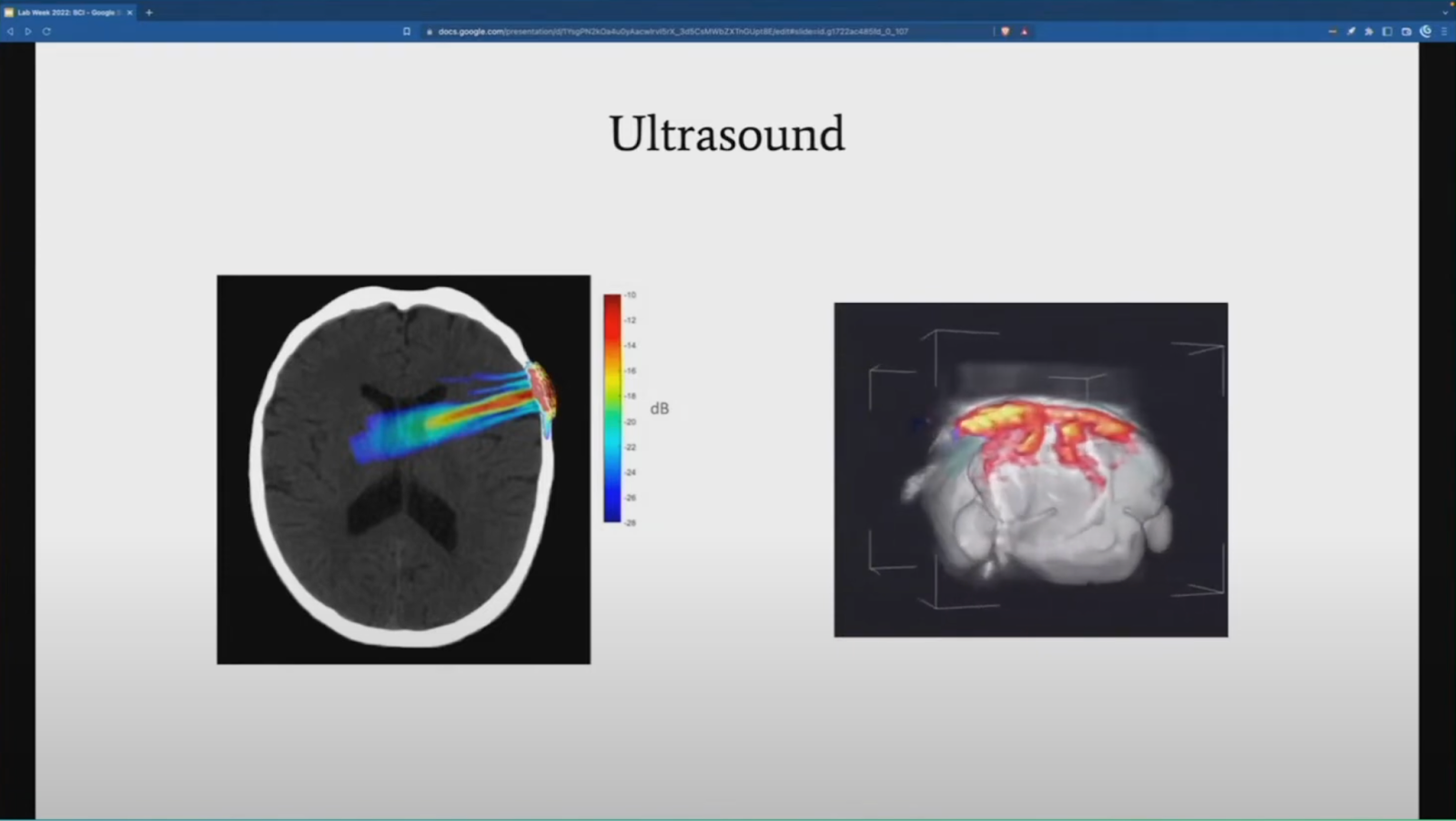

Another emerging neurotechnology is ultrasound-based methods. One type of ultrasound neurotechnology is already FDA approved; I just didn't mention it in the previous section because it's more of a surgical technique. This technique is called high-intensity focused ultrasound, or HIFU. In HIFU, waves like the ones depicted on the right here can be focused on a target in the brain and then used to ablate tissue, such as tumors or neurons that are damaged by Parkinson's disease. This is a non-invasive procedure in the sense that no physical matter is entering the body; only the ultrasound waves are. It's used on at least hundreds of patients every year, and maybe more. You basically lie with your head in this dome in an MRI scanner, and then the ultrasound is targeted to the area of interest.

But ultrasound is a generally very useful physical phenomenon beyond ablating tissue, because it propagates readily through soft tissue and it has a very long safety record (we're comfortable with imaging fetuses with it), among other nice properties. It's currently in clinical trials for a lot of other things besides this surgical use case. For one, if you take these HIFU systems and turn their power way, way, way down to the point where it's not damaging the tissue, it turns out that ultrasound still has some kind of local effect on neural activity where the beam is focused. This goes by a few different names, but you can look up transcranial ultrasound stimulation (TUS) to find it. How this works isn't well understood yet. If you talk to people who have had it done, there are certainly some undeniable short-term effects. Of course, it's not clear what those are indicative of or what they'll be useful for, but transcranial ultrasound is currently in trials in humans for a number of things: epilepsy, depression, PTSD and a few others. Ultrasound can also be used for imaging, similar to fMRI, as shown on the right here.

This is a mouse brain, not a human brain, but the spinny GIF was prettier. It's being trialed in humans as well as mice. Unlike with fMRI, though, there's potential for people to actually be able to wear an ultrasound imaging system like this or have one implanted, which opens up a lot of exciting possibilities for the future.

Near-infrared spectroscopy, or functional near-infrared spectroscopy (fNIRS), is another family of methods. Kind of like fMRI, these methods look at changes in blood oxygenation, although they can look at other things. But like ultrasound, fNIRS seems much more tractable to make into a wearable form factor than an fMRI machine, and I know that because people have done it. On the right side here is a device made by Kernel, called the Kernel Flow. They were at some point doing a clinical trial. You might actually be able to go use one of these if you're in the Los Angeles area; I went and tried one at one point. It uses a particular type of fNIRS called TD-fNIRS. Current NIRS techniques don't allow imaging the entire brain, like fMRI does. They're limited to, I think, about a centimeter of depth, but fNIRS is in clinical trials for a variety of applications, all as an imaging tool.

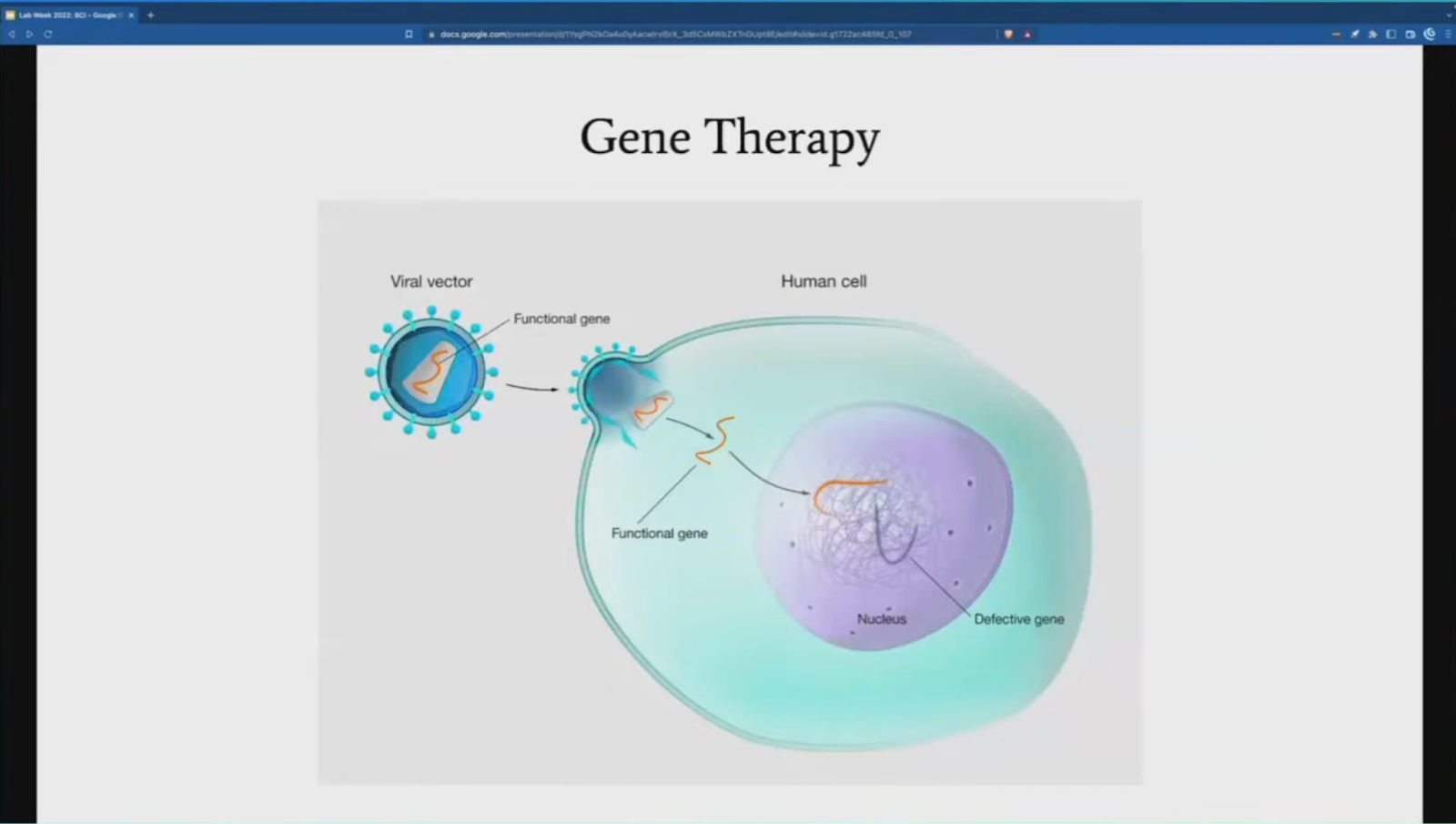

And then lastly, gene therapy is a very hot area for biotech and therapeutic development in general, and is also being applied to the brain. A gene therapy, if you haven't heard of it, is a technique that introduces genetic material into a cell. There have been thousands of clinical trials of gene therapies for disease treatment at this point. Most of those are not targeted at the brain, but some are. The main challenge when you're making a gene therapy tends to be delivery. How do you get the genetic material into the cells that you want? One way to do that is to package the DNA or RNA into some kind of lipid or polymer. If you have received an mRNA vaccine for COVID, then congratulations, you've had this type of gene therapy. But another way of building these vectors, which I think it's fair to say has more research behind it at the moment, is to use viruses to deliver the genes.

These delivery vehicles are called viral vectors, shown here in this image on the top left. Viruses are nature's experts at sneaking around your body and inserting genetic material into cells, and so they can be engineered, hopefully, to do so with specific useful properties, like selectively targeting certain types of cells or tissues. As I said, most of the neuro gene therapies that are in clinical trials now are related to diseases, like Alzheimer's or Parkinson's. But because you can fairly arbitrarily swap out the genetic information in a viral vector to encode whatever proteins you want, once some of these gene therapies are developed for neurological diseases, they're likely to be a massive enabler for neurotechnology in general. It's kind of like having a general deployment platform like AWS, where you can just swap out the code that's running on it. In that metaphor, the VM is the viral vector and the functional gene is the code.
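For readers who think in code, the deployment-platform metaphor can be sketched as a small data model. This is purely an illustration of the analogy, not a model of real vector biology: the class names, fields and placeholder values are all hypothetical, and it ignores real constraints such as limits on payload size.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Payload:
    """The 'code' in the metaphor: a gene encoding some protein (placeholder names)."""
    gene_name: str
    encodes: str

@dataclass(frozen=True)
class ViralVector:
    """The 'VM' in the metaphor: a delivery vehicle with targeting and a swappable payload."""
    name: str
    target_cell_type: str
    payload: Payload

    def with_payload(self, new_payload):
        # Same delivery platform and targeting, different cargo.
        return replace(self, payload=new_payload)

# A vector first developed to deliver a therapeutic gene...
therapy = ViralVector("vector-A", "neurons", Payload("gene-X", "therapeutic protein"))
# ...reused as-is to deliver a different gene.
tool = therapy.with_payload(Payload("gene-Y", "some other protein"))

print(therapy)
print(tool)
```

The design point is the one made in the talk: the delivery work lives in the vector, so once it exists, changing what gets delivered is a comparatively small step.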

# Themes for the Future of Neurotechnology [31:35]

I wanted to pause here briefly and just empathize with possibly some members of the audience. If you're anything like me when I started in neurotech, you might be thinking that this all just seems like a massively scattershot set of tools and techniques, and you might be wondering if there's a neat ontology that these all fall into. If there is, I don't know of it. Neurotechnology isn't quite as simple as just fabricating more and smaller electrodes and sticking them in more places, although that's not necessarily a bad approach. But brains are complicated biological systems, and there are so many ways to interact with them: different physical forces, different biological substrates to interact with, different routes into the brain itself (through the vasculature, drilling a hole, that kind of thing). So it's not clear which neurotechnological approach is ultimately going to lead to which degrees of control over the nervous system, or what effects each one will have. I just wanted to acknowledge that feeling, if you're feeling it. I also wanted to suggest, though, that this huge frontier is one of the things that makes neurotechnology so exciting: there are so many paths toward progress and good ideas to be tried. And with that framing, I can't predict the future, but I want to go over some general themes for the future of neurotechnology…

To watch the rest of Milan Cvitkovic’s talk, go here.

If you want to learn more about Protocol Labs, subscribe to the PL Updates newsletter here.